Machine learning is a field of artificial intelligence focused on automating analytical model building. The rapid growth in data volume opens up new possibilities for research into advanced data analysis techniques and machine learning model development. Because models learn from data, they require minimal human intervention, which makes them an efficient alternative to manual analysis.

Common issues faced when training a machine learning model

While a machine learning model that performs its task well is always the ultimate goal, in reality data- or parameter-related issues sometimes prevent it from reaching its full potential. There are numerous problems you can face when training your machine learning model, including the following:

- Poor quality or biased data. The outcome of a machine learning model depends directly on the quality of the data you feed the algorithm. Since the model is sensitive to the data it works with, enough errors within a dataset may result in large-scale inaccuracies once the model is trained. The same applies to data bias. For instance, if we train an algorithm on images of horses only against green backgrounds and of dogs only against white backgrounds, the trained model might classify a horse on a white background as a dog.

- Insufficient data. Machine learning models require a certain amount of data for effective training and good performance. When relevant data is inaccessible or scarce, for example when there are too few relevant images, the model's performance suffers. For instance, if you do not have many accessible and reliable images of pneumonia-infected lungs, a model trained on this data will not have enough information to learn from, resulting in high variance between its performance on the training data and on real-world cases.

- Non-representative training data. When choosing data for training a machine learning model, remember that the model's purpose is to make decisions about the corresponding population. Therefore, for the model to generalize well and perform well in real-world scenarios, the training data must accurately represent that population. Otherwise, decisions the model makes based on this sample will not apply to the population as a whole.

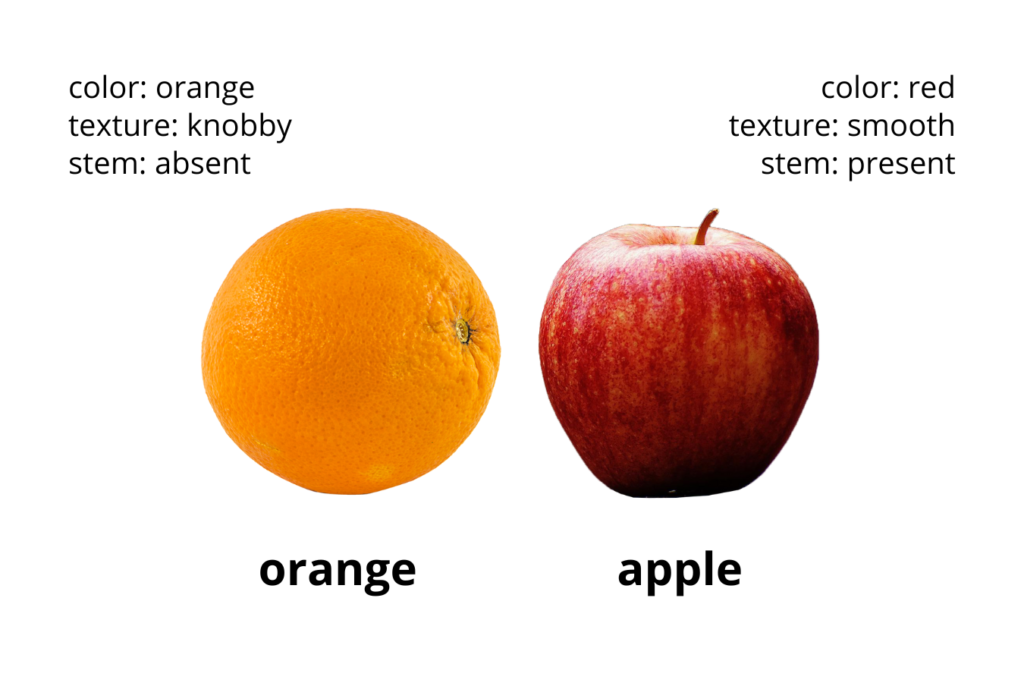

- Choosing irrelevant features. Feature selection is a crucial task that impacts a machine learning model's performance. Although modern neural networks are fairly good at distinguishing between relevant and irrelevant features automatically, manual feature selection can further improve a network's accuracy and reduce its training time. For instance, if we are building a model that classifies fruit as either an apple or an orange, features such as the fruit's size and shape are largely irrelevant, while skin texture, the presence of a stem, and color are relevant. Failing to remove irrelevant features leads the model to learn from noisy data and, therefore, to perform worse.

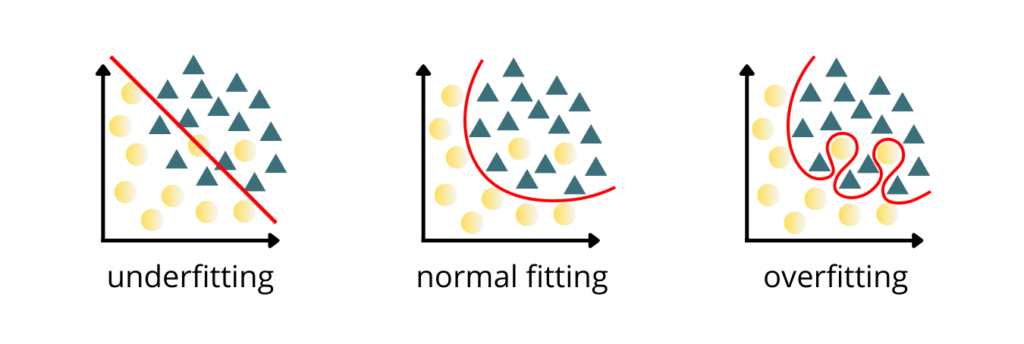

- Model underfitting. Underfitting occurs when we have too few relevant features or too little data to build an accurate model, or when we oversimplify the problem by removing relevant features. Because of this oversimplification, the model is unable to capture the relationship between the input attributes and the target variable, so it performs poorly even on the training data.

- Model overfitting. By contrast, overfitting occurs when the model is overtrained, does not have enough input data, or is too complex. Instead of learning generalizable patterns, the model memorizes the training dataset, including the noise within the visual data. As a result, it cannot generalize and performs poorly on unseen data, which makes it of little use for the computer vision task it was designed for.

Although it may seem that there is a lot that can go wrong when training a machine learning model, there are as many solutions that help prevent such issues.

Below, we will discuss machine learning model overfitting and how the data needs to be prepared to make sure it is avoided as much as possible.

How to prevent machine learning model overfitting?

Model overfitting is a prevalent issue in training computer vision models. Once the model is trained, overfitting can be identified by a low error rate on the training data combined with high variance, that is, a much higher error rate on data the model has not seen. This is why one of the earliest pre-processing steps is to split the dataset into training and validation samples: the validation sample lets us evaluate the model's performance on data it was not trained on.
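A minimal sketch of this split in Python, assuming the dataset is simply a list of items (for example, image file names). The function names and the 0.1 gap threshold in `looks_overfitted` are illustrative assumptions, not a standard rule or part of any particular tool.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_validation_split(items: Sequence[T], val_fraction: float = 0.2,
                           seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of `items` and hold out `val_fraction` of it for validation."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be strictly between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # a seeded local RNG keeps the split reproducible
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]  # (training set, validation set)


def looks_overfitted(train_error: float, val_error: float, max_gap: float = 0.1) -> bool:
    """Hypothetical heuristic: flag a model whose validation error far exceeds its training error."""
    return val_error - train_error > max_gap


train_set, val_set = train_validation_split(range(100), val_fraction=0.2)
print(len(train_set), len(val_set))                       # 80 20
print(looks_overfitted(train_error=0.02, val_error=0.35))  # True: low training error, high validation error
```

Holding the validation items out before training is what makes the comparison meaningful: the model never sees them, so its error on them estimates how it will perform on unseen data.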

While it is not possible to know whether the model will overfit beforehand, there are several ways to prepare your dataset to prevent it as much as possible.

Improve your dataset

- Use more data. Expanding the training set with clean, high-quality data may increase the accuracy of the model. Make sure to include only clean and relevant data; otherwise, the model may learn the noise along with the useful patterns, increasing the risk of overfitting.

- Increase data variety. In machine learning, data variety is the diversity of data within the problem scope. For example, if you wish to train a classification model to distinguish different types of footwear, you have to make sure that all its categories are present, from sneakers, boat shoes, and flip-flops to high heels, ballet flats, and cowboy boots. With a full picture of what a shoe is and what it can look like, the model will be able to generalize to unseen data. To increase variety further, add images with diverse backgrounds, lighting conditions, object sizes, and numbers of objects of interest per image.

Use regularization techniques

Regularization refers to techniques that improve the generalization of a model by constraining the training process or by making small changes to the training data. Some of the most common ones are listed below.

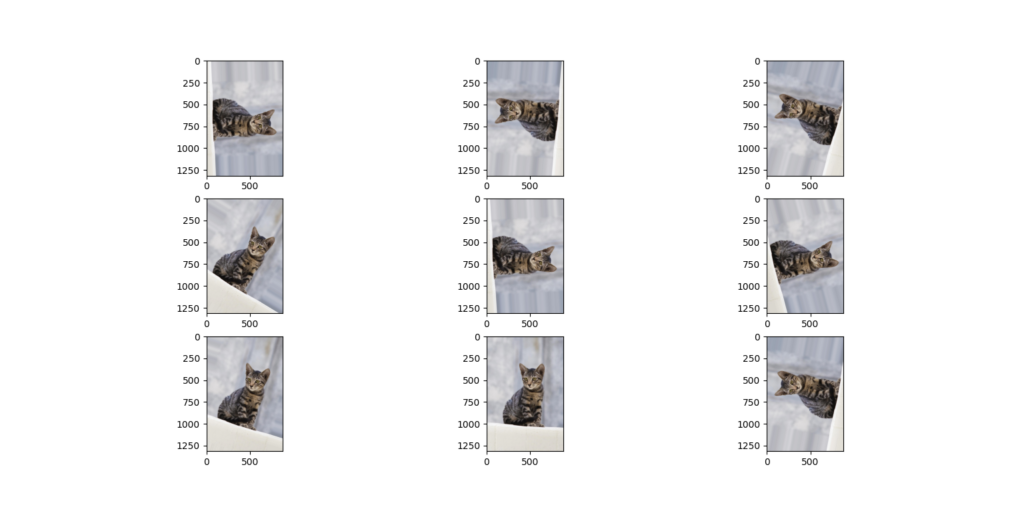

- Data augmentation. If you cannot find enough relevant data for your dataset, you can enlarge your training set by modifying the existing data yourself. Your existing visual data can be rotated, skewed, distorted, scaled up or down, or sheared to artificially extend your training set.

- Early stopping. One of the biggest issues in training a machine learning model is deciding how long training should last. At some point during training, the model stops generalizing and starts learning irrelevant statistical noise within the data. Training too briefly may result in underfitting, while training too long may cause the model to overfit. A solution is to stop training as soon as the performance on the validation dataset starts declining. This simple technique reduces overfitting and thereby improves the generalization of the neural network.

- L1 and L2 regularization. L1 regularization adds the sum of the absolute values of the weights to the loss and encourages many weights to become exactly zero, resulting in a sparser network. L2 regularization, on the other hand, adds the sum of the squared values of the weights to the loss, which shrinks large weights toward zero but rarely makes them exactly zero, so the resulting weights are less sparse. In both cases, the penalty is usually scaled by a coefficient that sets the strength of the regularization.

- Dropout. This is another technique for addressing overfitting; it works by temporarily deactivating some neurons during training. Each neuron is deactivated with a given probability, called the dropout rate, so at every training step the network effectively works with fewer neurons, which reduces its complexity.
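The geometric transformations mentioned under data augmentation can be illustrated with a minimal pure-Python sketch. It assumes a tiny grayscale image stored as a list of pixel rows and covers only horizontal flips and 90-degree rotations; skewing, scaling, or shearing real images would normally be done with an image-processing library. The helper names are hypothetical.

```python
from typing import List

Image = List[List[int]]  # a grayscale image as a list of rows of pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate the image by 90 degrees clockwise."""
    # Reverse the row order, then transpose: column j of the reversed image becomes row j.
    return [list(col) for col in zip(*img[::-1])]


def augment(img: Image) -> List[Image]:
    """Return the original image plus simple geometric variants of it."""
    rotated = rotate_90_clockwise(img)
    return [img, flip_horizontal(img), rotated, rotate_90_clockwise(rotated)]


image = [[1, 2],
         [3, 4]]
for variant in augment(image):
    print(variant)
# [[1, 2], [3, 4]]  original
# [[2, 1], [4, 3]]  horizontal flip
# [[3, 1], [4, 2]]  rotated 90 degrees clockwise
# [[4, 3], [2, 1]]  rotated 180 degrees
```

For object detection data, any bounding boxes must be transformed in the same way as the image so that the labels still match the objects.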
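The early stopping rule described above can be sketched as a patience counter: training stops once the validation loss has not improved for a set number of epochs. This is a minimal sketch assuming the per-epoch validation losses are already known (in a real training loop you would compute each one after an epoch); the function name and the `patience` value are illustrative assumptions.

```python
from typing import Sequence, Tuple


def early_stopping(val_losses: Sequence[float], patience: int = 3) -> Tuple[int, int]:
    """Return (stop_epoch, best_epoch) for a patience-based early stopping rule.

    Training stops once `patience` epochs have passed since the best (lowest)
    validation loss; the weights saved at `best_epoch` are the ones to keep.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch  # new best: this is where you would save a checkpoint
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch  # no improvement for `patience` epochs: stop
    return len(val_losses) - 1, best_epoch  # ran out of epochs without triggering the rule


# Validation loss falls, then rises as the model starts to overfit.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
print(early_stopping(losses, patience=3))  # (6, 3)
```

Keeping the weights from `best_epoch` rather than from the epoch where training stopped means the final model is the one that performed best on the validation set.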
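To make the L1 and L2 penalties concrete, here is a minimal sketch of how each term is computed and added to the loss, along with the gradient each one contributes to a single weight. The strength coefficient `lam` (often written λ), the example loss value, and the function names are assumptions for illustration; deep learning frameworks provide their own built-in versions.

```python
from typing import Sequence


def l1_penalty(weights: Sequence[float], lam: float) -> float:
    """L1 term: lam times the sum of absolute weight values."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights: Sequence[float], lam: float) -> float:
    """L2 term: lam times the sum of squared weight values."""
    return lam * sum(w * w for w in weights)


def penalty_gradient(w: float, lam: float, kind: str) -> float:
    """Gradient of the penalty with respect to a single weight w."""
    if kind == "l1":
        # Same-sized push toward zero regardless of |w| (subgradient 0 at w == 0),
        # which favors setting small weights to zero.
        return lam * ((w > 0) - (w < 0))
    if kind == "l2":
        # Push proportional to w: large weights shrink fast, small ones barely move.
        return 2 * lam * w
    raise ValueError("kind must be 'l1' or 'l2'")


weights = [0.5, -1.0, 0.0, 2.0]
data_loss = 0.40  # hypothetical loss on the training data before regularization
print(data_loss + l1_penalty(weights, lam=0.01))  # 0.40 + 0.01 * (0.5 + 1.0 + 0.0 + 2.0)
print(data_loss + l2_penalty(weights, lam=0.01))  # 0.40 + 0.01 * (0.25 + 1.0 + 0.0 + 4.0)
# For a tiny weight, the L1 push is far larger than the L2 push.
print(penalty_gradient(0.001, lam=0.01, kind="l1"), penalty_gradient(0.001, lam=0.01, kind="l2"))
```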
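Dropout can be sketched for a single layer's activations. This minimal version uses the common "inverted dropout" formulation, which goes slightly beyond the description above: during training it scales the surviving activations by 1 / (1 - rate) so that nothing needs to change at inference time. The function name and arguments are hypothetical.

```python
import random
from typing import List, Optional, Sequence


def dropout(activations: Sequence[float], rate: float, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: during training, zero each activation with probability `rate`
    and scale the survivors by 1 / (1 - rate) to keep their expected value unchanged."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # at inference time every neuron stays active
    rng = rng or random.Random()
    keep_prob = 1.0 - rate
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


layer = [0.2, 0.8, 0.5, 1.0, 0.3]
print(dropout(layer, rate=0.5, rng=random.Random(42)))  # some entries become 0.0, the rest are doubled
print(dropout(layer, rate=0.5, training=False))         # [0.2, 0.8, 0.5, 1.0, 0.3]
```

Dropout is applied only while training; when the trained model makes predictions, all neurons are active.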

Which technique works best for your machine learning model depends on your data and the task it is being trained for. After the model is trained, its performance can be tested on a separate set of data to see how well it generalizes to unseen data.

Brief summary of how to prevent overfitting

A model that generalizes to unseen data and returns accurate predictions is the ultimate goal in training a machine learning model. In reality, the data, model features, or training time can cause the model to run into issues. One of the biggest, overfitting, occurs when the model memorizes everything from the training dataset rather than learning from it.

There are several techniques that reduce the risk of overfitting, ranging from data augmentation, increased data variety, and more clean data to choosing the right features and stopping early when performance on the validation set no longer improves. Since every computer vision task is different and depends heavily on its data, finding the right techniques for model training may take some time and patience.

SentiSight.ai helps you prevent machine learning model overfitting

There are several ways you can prevent your machine learning model from overfitting when using the SentiSight.ai computer vision platform:

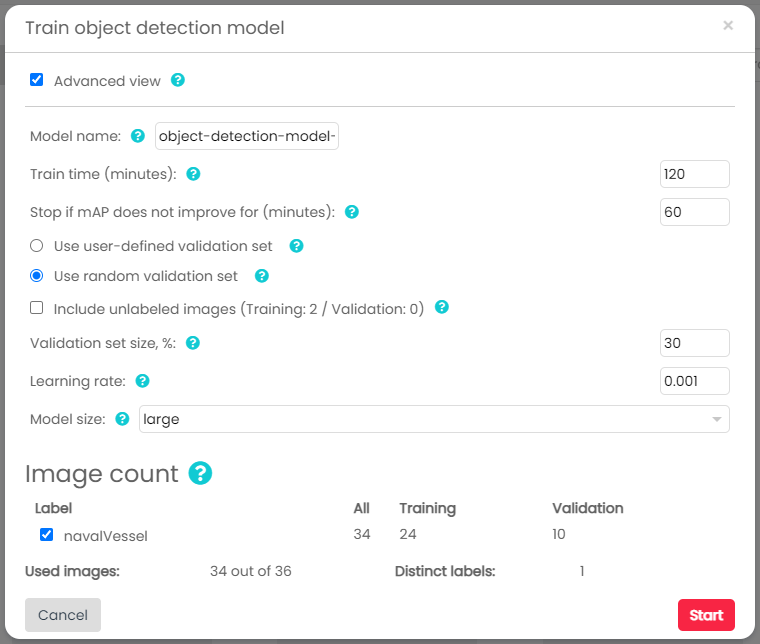

- Choose a validation set and define its size. After training, the validation set is used for an independent evaluation of the model, which lets you verify whether the model has overfitted. If you choose a random validation set, the platform automatically selects a percentage of the images for validation, picking them at random. If you choose a specific validation set instead, you will need to add images to it manually from the dashboard.

- Choose a learning rate suitable for your project. The learning rate controls how much the model weights change at each training step: the higher the learning rate, the faster the model trains. Keep in mind that a learning rate that is too high may prevent the model from converging to a good solution, while a learning rate that is too low slows training and increases the chance of getting stuck in a poor local minimum.

- Include your unlabeled images as negative samples. When training an object detection model, you can include unlabeled images, which will then be used as negative samples in training.

- Choose the training time and configure stopping parameters. The training time determines how long the model will be trained. In most cases, the longer you train a machine learning model, the more accurate it becomes. However, as mentioned earlier, training for too long can cause the model to stop improving and start learning the noise in the data, resulting in an overfitted model.

The stopping configuration stops the training if performance on the validation set does not improve for the chosen amount of time (in minutes). Stopping the training early denies the model the time it would need to start memorizing the data instead of learning from it, which helps prevent overfitting.

- Make use of SentiSight.ai model fine-tuning properties. The models at SentiSight.ai start from pre-trained model checkpoints, trained on large amounts of data, and are fine-tuned for the task at hand. When training starts from such a checkpoint with a small learning rate, the model is less likely to overfit and still achieves good results on small amounts of data. This is because the model was first trained to detect a wide variety of objects, which is a complicated task, and it only needs to be adjusted slightly to perform more specific tasks.
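To illustrate how the learning rate scales each weight update, here is a minimal gradient descent sketch on a toy one-parameter function f(w) = w², whose minimum is at w = 0. The function and the chosen rates are assumptions for simplicity: f has a single minimum, so the sketch shows convergence speed and overshooting but not local minima, and nothing here reflects the SentiSight.ai platform's internals.

```python
def gradient_descent(lr: float, steps: int = 20, w0: float = 5.0) -> float:
    """Minimize f(w) = w**2 with plain gradient descent and return the final weight."""
    w = w0
    for _ in range(steps):
        grad = 2 * w    # derivative of w**2
        w -= lr * grad  # the learning rate sets the size of every update
    return w


for lr in (0.01, 0.1, 0.9, 1.1):
    print(f"lr={lr}: w after 20 steps = {gradient_descent(lr):.4f}")
```

With lr=0.01 the weight is still far from 0 after 20 steps (slow training); lr=0.1 gets close to the minimum; lr=0.9 overshoots the minimum on every step and oscillates around it while converging; lr=1.1 overshoots by more each step and diverges.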

Conclusion

SentiSight.ai is an online image recognition platform that allows its users to label their datasets and train object detection, image classification, or instance segmentation models. These models can be deployed to improve various industries, such as manufacturing (for defect detection) or retail, to name just two.

To get started with your project, check out our blog posts on how to choose the right image labeling tool for the job and how to manage a project when working in a team.

For more information or assistance with your custom projects, contact us directly. The SentiSight.ai machine learning platform is here to help you complete the project you have always dreamed of.