As artificial intelligence (AI) models continue to grow in complexity and scale, the demand for more efficient and powerful computing hardware has never been greater. Existing computing architectures and silicon technologies, however, have reached a point of diminishing returns, just as AI applications require exponentially more processing power. “While GPUs are the best available tool today,” said Kailash Gopalakrishnan, co-founder of EnCharge AI, “we concluded that a new type of chip will be needed to unlock the potential of AI.” This pursuit of innovation has led to groundbreaking research efforts aimed at reimagining AI chips to address critical bottlenecks in memory and energy efficiency.

The Limitations of Current AI Hardware

For over a decade, AI advancements have largely been powered by graphics processing units (GPUs), which have become essential for training and deploying AI models. However, the industry’s rapid growth has exposed fundamental limitations in these architectures. The demand for computational power has skyrocketed, with AI workloads requiring a millionfold increase in processing capabilities between 2012 and 2022. Despite packing billions of transistors onto a single chip, modern GPUs are constrained by inefficiencies in data movement and energy consumption. Naveen Verma, a Princeton professor leading a research effort into AI chip innovation, points out that GPUs struggle with “bottlenecks in memory and computing energy,” creating a significant barrier to unlocking AI’s full potential.
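To put the millionfold figure in perspective, the short calculation below converts it into a doubling time. It is a back-of-the-envelope sketch that assumes smooth exponential growth across the decade, which the article does not claim; the variable names are illustrative.

```python
import math

# Figures taken from the paragraph above.
growth_factor = 1_000_000      # "millionfold" increase
years = 2022 - 2012            # ten-year span

# Assumption: growth was a steady exponential over the whole period.
doublings = math.log2(growth_factor)          # number of times demand doubled
months_per_doubling = years * 12 / doublings  # average time between doublings
annual_factor = growth_factor ** (1 / years)  # equivalent year-over-year multiplier

print(f"{doublings:.1f} doublings, one every {months_per_doubling:.1f} months")
print(f"equivalent to about {annual_factor:.1f}x per year")
```

Under that assumption, demand doubled roughly every six months, or about fourfold each year.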

The urgency for new solutions has been underscored by Nvidia’s soaring valuation, driven largely by the sales of AI inference chips, which run models after training rather than train them. Verma sees the greatest opportunity for impact in inference, emphasizing the need to decentralize AI beyond data centers and into everyday devices such as phones, laptops, and industrial equipment.

Reimagining AI Chips: A Three-Part Approach

To address these challenges, Verma’s team at Princeton, in collaboration with DARPA and EnCharge AI, is pioneering a novel chip architecture with three key elements. The first innovation lies in in-memory computing, an approach that eliminates the traditional separation between data storage and processing. Instead of constantly moving data between memory and the processor, computations are performed directly within memory cells, drastically reducing energy consumption and processing time.
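A toy count can show why keeping data in place matters. The sketch below compares how many values cross the memory boundary for one matrix-vector multiply, the core operation in neural networks. It is an illustrative model, not a description of the Princeton or EnCharge AI design: it ignores caching, weight reuse across batches, and the cost of reading out results, and the function names are hypothetical.

```python
def values_moved_conventional(rows: int, cols: int) -> int:
    """Processor and memory are separate: every weight is fetched once,
    the input vector is read, and the output vector is written back."""
    return rows * cols + rows + cols


def values_moved_in_memory(rows: int, cols: int) -> int:
    """Weights stay inside the memory array where the computation happens:
    only the input vector goes in and the output vector comes out."""
    return rows + cols


# Hypothetical layer size, chosen only for illustration.
rows, cols = 1024, 1024
conventional = values_moved_conventional(rows, cols)
in_memory = values_moved_in_memory(rows, cols)
reduction = conventional / in_memory

print(f"conventional: {conventional} values moved")
print(f"in-memory:    {in_memory} values moved")
print(f"reduction:    {reduction:.0f}x")
```

In this simplified model the weight traffic dominates, so removing it shrinks data movement by a factor that grows with the size of the layer.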

The second component of their approach is analog computation, which departs from the conventional reliance on digital processing. Unlike digital circuits that process information in binary form, analog computation leverages the physical properties of devices, such as electrical charge storage in capacitors. This method offers higher efficiency and density but historically posed challenges in precision. Verma and his team have tackled this limitation by refining control mechanisms to achieve unprecedented accuracy.

Finally, the third breakthrough comes from capacitor-based computing, which uses the precise geometric properties of capacitors to perform calculations with minimal dependence on environmental factors like temperature. “They only depend on geometry,” Verma explains of the capacitors, emphasizing that modern semiconductor manufacturing can control these properties with exceptional accuracy. This enables the creation of chips that are not only energy-efficient but also scalable and manufacturable using existing fabrication techniques.
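One way to picture charge-based analog computation is through charge sharing: when capacitors holding different voltages are connected together, they settle at a voltage equal to the total charge divided by the total capacitance. The sketch below uses that textbook relation to accumulate a binary dot product. It is a simplified model with ideal capacitors, not the circuit used by Verma's team, and the function names, reference voltage, and capacitor values are assumptions made for illustration.

```python
import math


def charge_share(voltages, capacitances):
    """Voltage after ideal capacitors are connected together:
    V_out = sum(C_i * V_i) / sum(C_i)."""
    total_charge = sum(c * v for c, v in zip(capacitances, voltages))
    return total_charge / sum(capacitances)


def analog_dot(inputs, weights, v_ref=1.0, unit_cap=1e-15):
    """Binary dot product in the charge domain (illustrative model).
    Each cell charges its capacitor to v_ref only when input AND weight are 1;
    sharing the charge then averages the products into a single voltage."""
    voltages = [v_ref if (x and w) else 0.0 for x, w in zip(inputs, weights)]
    caps = [unit_cap] * len(voltages)
    return charge_share(voltages, caps)


# Hypothetical 8-element binary vectors.
inputs = [1, 0, 1, 1, 0, 1, 1, 0]
weights = [1, 1, 0, 1, 0, 1, 0, 0]

v_out = analog_dot(inputs, weights)
recovered = round(v_out * len(inputs))            # convert voltage back to a count
exact = sum(x * w for x, w in zip(inputs, weights))

# Scale every capacitor by the same factor (e.g. a uniform process shift):
v_out_scaled = analog_dot(inputs, weights, unit_cap=1.1e-15)

print(v_out, recovered, exact)
print(math.isclose(v_out, v_out_scaled))
```

Because the output depends only on ratios of capacitances, scaling every capacitor by the same amount leaves the result unchanged in this model. That is consistent with the point that matching geometry, rather than absolute device values, determines accuracy.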

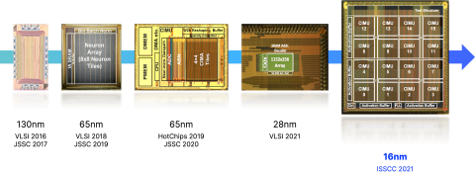

Image Source: EnCharge AI

The Road Ahead for AI Hardware

The Defense Advanced Research Projects Agency (DARPA) has recognized the potential of this new AI chip paradigm by awarding an $18.6 million grant to Princeton’s research effort. This funding aims to accelerate the development and commercialization of these revolutionary chips, with the ultimate goal of moving AI processing out of centralized data centers and into diverse environments, from hospitals and highways to consumer devices and even space applications.

Verma envisions a future where AI is seamlessly integrated into everyday life without being tethered to power-hungry data centers. “You unlock it from that,” he said, “and the ways in which we can get value from AI, I think, explode.”

Conclusion

The limitations of current AI hardware have made it clear that a fundamental shift is needed to sustain the growth of AI applications. The collaborative efforts led by Princeton and supported by DARPA are pushing the boundaries of what’s possible with AI chips, paving the way for more efficient, scalable, and decentralized computing. As Verma and his team continue to refine their groundbreaking approach, the future of AI may soon be defined by chips that go beyond transistors, delivering computational gains that unlock AI’s true potential across the globe.

Sources: Princeton Engineering