In today’s data-driven world, artificial intelligence has transformed from a futuristic concept into a practical necessity across industries. Behind every AI breakthrough—whether it’s a chatbot that convincingly mimics human conversation or algorithms that detect diseases from medical images—lies a sophisticated foundation that makes these innovations possible. This foundation is AI infrastructure.

Understanding the Core of AI Innovation

AI infrastructure represents the complete hardware and software ecosystem specifically designed to support artificial intelligence and machine learning workloads. Unlike traditional IT infrastructure, which primarily serves general computing tasks, AI infrastructure addresses the unique, resource-intensive demands of developing, training, and deploying AI models.

Think of AI infrastructure as the engine room of modern intelligence systems—invisible to end users but absolutely critical to performance. Without properly designed AI infrastructure, even the most brilliant algorithms falter under real-world conditions.

Why Traditional IT Infrastructure Falls Short

The computational demands of AI differ fundamentally from those of conventional software applications. Traditional IT setups, built around general-purpose CPUs, are poorly suited to the parallel processing and data throughput that modern AI workloads require.

AI systems process enormous datasets through complex mathematical operations that benefit from specialized hardware architectures. The difference isn’t subtle—in some cases, purpose-built AI infrastructure can accelerate model training by orders of magnitude compared to general-purpose computing environments.

Essential Hardware Components

Graphics Processing Units (GPUs)

At the heart of modern AI infrastructure lies the GPU. Originally designed for rendering complex graphics, these processors have become indispensable for AI tasks due to their ability to perform thousands of identical operations simultaneously.

GPU servers pack multiple GPUs into a single chassis, and clusters of these servers are optimized for the matrix and vector calculations prevalent in machine learning. NVIDIA dominates this space with data-center GPUs designed to handle the most demanding workloads.
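
To make the parallelism concrete, here is a minimal pure-Python sketch of a matrix product. It is illustrative only: real frameworks dispatch this work to optimized GPU kernels. The helper names and the batch size of 64 are assumptions chosen for the example.

```python
# Each output element of a matrix product depends only on one row of A and
# one column of B, so every element can be computed independently.
def matmul(a, b):
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):        # on a GPU, each (i, j) pair could be
        for j in range(cols):    # handled by its own thread
            out[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return out


def matmul_flops(rows, inner, cols):
    """Multiply and add operations in a (rows x inner) @ (inner x cols) product."""
    return 2 * rows * inner * cols


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
product = matmul(a, b)  # [[19.0, 22.0], [43.0, 50.0]]

# One 784-to-128 dense layer applied to an assumed batch of 64 inputs
layer_flops = matmul_flops(64, 784, 128)  # 12,845,056 operations
```

Even this single small layer needs roughly 12.8 million operations per batch, none of which depend on each other within the product, which is why hardware with thousands of parallel lanes pays off.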

Tensor Processing Units (TPUs)

TPUs are application-specific chips developed by Google to accelerate tensor operations, the mathematical backbone of many machine learning frameworks. Built from the ground up for AI workloads, they provide high throughput and efficiency for deep learning applications.

Unlike general-purpose processors, TPUs are optimized specifically for machine learning operations, which translates into faster training times and more responsive AI applications.

AI Accelerators

Beyond GPUs and TPUs, the AI hardware ecosystem includes specialized accelerators designed for specific workloads or deployment scenarios:

- Field-Programmable Gate Arrays (FPGAs): Offering reconfigurable hardware that can be optimized for specific AI tasks

- Application-Specific Integrated Circuits (ASICs): Custom-designed chips that maximize performance for particular AI applications

- Edge Computing Devices: Specialized hardware that enables AI processing directly on IoT devices and sensors

High-Performance Computing Systems

For the most demanding AI applications—from simulating complex systems to training massive language models—high-performance computing (HPC) systems provide the necessary computational muscle. These systems combine powerful servers, specialized networking, and advanced cooling technologies to support AI workloads that would overwhelm conventional infrastructure.

Critical Software Elements

Machine Learning Frameworks

Machine learning frameworks provide the development environment and tools for building AI models. Popular options include:

- TensorFlow: Google’s open-source platform supporting a wide range of AI applications

- PyTorch: A flexible framework originally developed by Meta (formerly Facebook), favored for research and prototyping

- Keras: A high-level API that simplifies neural network construction

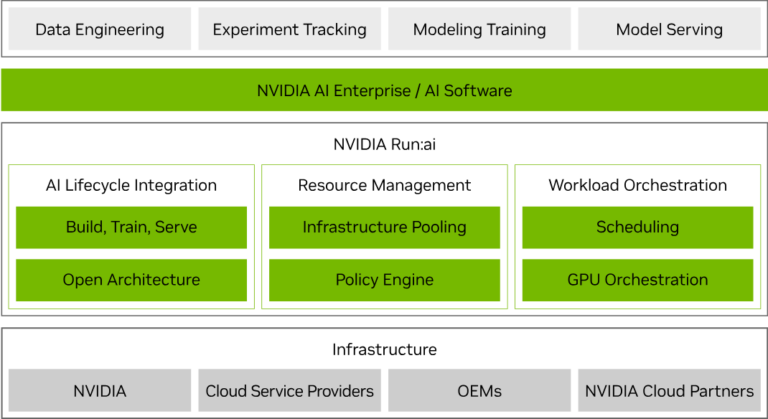

As an example, NVIDIA Run:ai provides AI workload orchestration with GPU management, along with a policy engine for governing how resources are shared across the AI lifecycle. Image credit: NVIDIA

These frameworks abstract away much of the complexity in implementing machine learning algorithms, allowing developers to focus on model architecture rather than low-level implementation details.

```python
# Simple PyTorch example: defining a small fully connected neural network
import torch
import torch.nn as nn


# Define a basic neural network
class SimpleNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(784, 128)  # e.g. a flattened 28x28 input image
        self.fc2 = nn.Linear(128, 64)
        self.fc3 = nn.Linear(64, 10)  # 10 output values, one per class
        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.relu(self.fc1(x))
        x = self.relu(self.fc2(x))
        x = self.fc3(x)  # raw logits; no activation on the output layer
        return x


# Initialize the model
model = SimpleNet()
```
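
The layer sizes in SimpleNet (784, 128, 64, 10) also give a quick way to estimate how much memory a model's weights need, which is a useful first step when sizing hardware. The sketch below is plain arithmetic and assumes 4-byte float32 parameters, PyTorch's default; `count_parameters` is a helper written for this example.

```python
layer_sizes = [784, 128, 64, 10]  # input, two hidden layers, output


def count_parameters(sizes):
    """Weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


params = count_parameters(layer_sizes)  # 109,386 parameters
weights_mb = params * 4 / 1e6           # about 0.44 MB at 4 bytes each
```

The same arithmetic scales up: a model with billions of parameters needs gigabytes for its weights alone, before counting gradients, optimizer state, and activations.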

Data Processing Libraries

Before data reaches AI models, it typically undergoes extensive preparation and transformation. Data processing libraries streamline these tasks:

- Pandas: For data manipulation and analysis

- NumPy: Supporting large multi-dimensional arrays and matrices

- SciPy: Providing algorithms for optimization and statistical analysis

These tools form the foundation of the data pipeline feeding into AI systems. They work on data held in a single machine's memory, so datasets that outgrow it are usually processed in chunks or handed to distributed processing engines.
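
One common way to prepare data that does not fit in memory is to stream it in fixed-size chunks. The sketch below uses only the Python standard library as a stand-in for what Pandas offers through the `chunksize` argument of `read_csv`. The column names and sample values are hypothetical.

```python
import csv
import io
from itertools import islice


def read_in_chunks(handle, chunk_size):
    """Yield lists of up to chunk_size rows (as dicts) from a CSV file handle."""
    reader = csv.DictReader(handle)
    while True:
        chunk = list(islice(reader, chunk_size))
        if not chunk:
            return
        yield chunk


def streaming_mean(handle, column, chunk_size=2):
    """Compute a column mean without holding the whole file in memory."""
    total, count = 0.0, 0
    for chunk in read_in_chunks(handle, chunk_size):
        total += sum(float(row[column]) for row in chunk)
        count += len(chunk)
    return total / count if count else float("nan")


# Hypothetical in-memory sample standing in for a large file on disk
sample = io.StringIO("pixel_mean,label\n0.25,3\n0.50,7\n0.75,1\n1.00,4\n0.00,9\n")
mean_value = streaming_mean(sample, "pixel_mean")  # 0.5
```

Only one chunk is resident at a time, so memory use depends on the chunk size rather than the file size.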

Orchestration and Management Systems

AI infrastructure requires sophisticated management tools to coordinate resources, schedule workloads, and monitor performance. Examples include:

- NVIDIA Run:ai: Providing advanced orchestration for AI workloads and GPU resources

- Kubernetes: Enabling containerized deployment of AI applications

- MLflow: Tracking experiments and managing the machine learning lifecycle

Effective orchestration ensures maximum utilization of expensive computing resources while giving data scientists the flexibility to experiment and innovate.
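
As a rough illustration of what workload scheduling involves, the following toy scheduler hands out GPUs from a shared pool in priority order. It is a simplified sketch, not how Run:ai or Kubernetes schedule work internally, and the job names, priorities, and GPU counts are made up.

```python
import heapq


def schedule(jobs, total_gpus):
    """Greedy priority scheduling; returns (started, waiting) job names.

    jobs: list of (name, priority, gpus_needed). Lower priority numbers run
    first; ties are broken by submission order.
    """
    queue = [(priority, index, name, gpus)
             for index, (name, priority, gpus) in enumerate(jobs)]
    heapq.heapify(queue)
    free, started, waiting = total_gpus, [], []
    while queue:
        _, _, name, gpus = heapq.heappop(queue)
        if gpus <= free:
            free -= gpus
            started.append(name)
        else:
            waiting.append(name)  # stays queued until GPUs are released
    return started, waiting


jobs = [
    ("notebook", 2, 1),
    ("train-large", 1, 6),
    ("hyperparam-sweep", 3, 4),
    ("inference-test", 2, 1),
]
started, waiting = schedule(jobs, total_gpus=8)
# started: ['train-large', 'notebook', 'inference-test']
# waiting: ['hyperparam-sweep']
```

Production schedulers add preemption, fairness quotas, and placement constraints on top of this basic idea.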

Storage Architecture for AI

AI workloads place unique demands on storage systems. The ideal storage architecture balances several competing priorities:

- Capacity: Managing potentially petabytes of training data

- Performance: Delivering data to compute resources fast enough to avoid bottlenecks

- Accessibility: Making data available across distributed computing environments

- Cost-efficiency: Optimizing storage tiers to balance performance and budget constraints

Modern AI storage solutions often implement hierarchical approaches, with frequently accessed data stored on high-performance flash storage while archival data resides on more economical platforms.
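
A hierarchical policy of this kind can be sketched as a simple rule that maps how recently a dataset was accessed to a storage tier. The tier names, thresholds, and datasets below are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    size_tb: float
    days_since_access: int


def choose_tier(dataset, hot_days=7, warm_days=90):
    """Map access recency to a storage tier (thresholds are illustrative)."""
    if dataset.days_since_access <= hot_days:
        return "flash"
    if dataset.days_since_access <= warm_days:
        return "disk"
    return "archive"


datasets = [
    Dataset("active-training-set", 12.0, 1),
    Dataset("last-quarter-logs", 40.0, 45),
    Dataset("2019-raw-captures", 300.0, 400),
]
placement = {d.name: choose_tier(d) for d in datasets}
flash_tb = sum(d.size_tb for d in datasets if choose_tier(d) == "flash")  # 12.0
```

Here only 12 TB of the 352 TB total needs to sit on the most expensive tier.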

The AI Lifecycle and Infrastructure Requirements

Different phases of the AI lifecycle place different demands on infrastructure:

Development and Experimentation

During initial model development, data scientists need flexible, interactive environments with good visualization tools. Infrastructure requirements include:

- Jupyter notebooks for interactive development

- Visualization libraries for exploring data relationships

- Quick iteration cycles for testing hypotheses

Training and Optimization

Model training represents the most computationally intensive phase, often requiring:

- Maximum GPU/TPU resources for parallel processing

- High-bandwidth, low-latency interconnects between compute nodes

- Efficient data pipelines to keep processing units fed with training examples
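
Whether a data pipeline can keep processing units fed comes down to simple arithmetic. The following back-of-the-envelope sketch uses hypothetical numbers throughout (GPU count, per-GPU sample rate, sample size, storage bandwidth) and ignores caching and compression.

```python
def required_read_throughput(gpus, samples_per_sec_per_gpu, bytes_per_sample):
    """Bytes per second storage must deliver to keep every GPU busy."""
    return gpus * samples_per_sec_per_gpu * bytes_per_sample


# Hypothetical workload: 8 GPUs, each consuming 1,500 images/s of about 110 KB
needed = required_read_throughput(8, 1500, 110_000)  # 1,320,000,000 bytes/s
needed_gb_per_s = needed / 1e9                       # 1.32 GB/s

storage_gb_per_s = 1.0  # assumed bandwidth the storage layer can deliver
gpu_busy_fraction = min(1.0, storage_gb_per_s / needed_gb_per_s)  # about 0.76
```

Under these assumptions the GPUs would sit idle roughly a quarter of the time waiting on data, so the storage layer rather than the compute is the bottleneck.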

Deployment and Inference

Once trained, models must be deployed to production environments where they can deliver value:

- Edge devices may require optimized, lightweight models

- Real-time applications demand low-latency inference capabilities

- High-volume services need scalable, resilient infrastructure
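
For real-time applications, tail latency usually matters more than the average. Below is a minimal sketch of checking measured latencies against a target, using the nearest-rank percentile method. The latency samples and the 50 ms target are hypothetical.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of measurements."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical per-request inference latencies in milliseconds
latencies_ms = [12, 14, 13, 15, 11, 13, 90, 12, 14, 13]
p50 = percentile(latencies_ms, 50)  # 13
p99 = percentile(latencies_ms, 99)  # 90
meets_target = p99 <= 50            # False: one slow request breaks the target
```

The median looks healthy here, yet a single slow request pushes the 99th percentile well past the target, which is the kind of problem an average would hide.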

Building an AI Factory: The Modern Approach

The concept of an “AI factory” has emerged as a framework for systematically producing AI solutions at scale. This approach integrates all infrastructure components into a cohesive system supporting the entire AI lifecycle.

Key elements of the AI factory approach include:

- Self-service platforms allowing data scientists to provision resources on demand

- Standardized workflows ensuring consistent quality and reproducibility

- Automated pipelines streamlining the progression from development to deployment

- Centralized governance maintaining security and compliance throughout

This factory model transforms AI development from a series of one-off projects into a streamlined production process, dramatically increasing an organization’s capacity to deliver AI solutions.

Cloud vs. On-Premises AI Infrastructure

Organizations face a critical choice between cloud-based and on-premises AI infrastructure. Each approach offers distinct advantages:

Cloud-Based AI Infrastructure

- Advantages: Rapid scaling, pay-as-you-go economics, access to the latest hardware

- Considerations: Potential data transfer bottlenecks, long-term cost management

Major cloud providers like AWS, Microsoft Azure, and Google Cloud offer specialized AI services that eliminate much of the complexity in building and maintaining infrastructure.

On-Premises AI Infrastructure

- Advantages: Complete control, potentially lower latency, data sovereignty

- Considerations: Significant capital investment, technology obsolescence risk

Organizations with specific security requirements or existing data center investments may prefer on-premises solutions despite their higher management overhead.

Hybrid Approaches

Many enterprises adopt hybrid strategies, maintaining sensitive workloads on-premises while leveraging cloud resources for burst capacity or specialized capabilities not available internally.
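
The cost side of this decision can be approximated with a break-even calculation. The figures in this sketch are placeholders rather than vendor pricing, and the model ignores factors such as staffing, depreciation schedules, and committed-use discounts.

```python
def breakeven_hours(capex, onprem_hourly_opex, cloud_hourly_rate):
    """GPU-hours of use after which owning becomes cheaper than renting."""
    saving_per_hour = cloud_hourly_rate - onprem_hourly_opex
    if saving_per_hour <= 0:
        return float("inf")  # renting is never more expensive per hour
    return capex / saving_per_hour


# All figures are hypothetical placeholders
hours = breakeven_hours(capex=30_000, onprem_hourly_opex=0.50,
                        cloud_hourly_rate=3.00)   # 12,000 GPU-hours
months_at_full_use = hours / (24 * 30)            # about 16.7 months
months_at_25_percent = months_at_full_use / 0.25  # about 66.7 months
```

The result is highly sensitive to utilization: hardware that runs around the clock pays for itself far sooner than hardware used a quarter of the time, which is one reason bursty workloads tend to favor the cloud.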

The Hidden Challenges of AI Infrastructure

Beyond the technical components, organizations deploying AI infrastructure face several systemic challenges:

Underutilized Resources

Fixed GPU allocations often lead to inefficient resource usage, with expensive hardware sitting idle during development downtime or between training runs. Dynamic orchestration addresses this by pooling resources across teams and workloads.

Infrastructure Fragmentation

As AI initiatives spread across an organization, disconnected infrastructure islands emerge—each with its own tools, policies, and resource pools. This fragmentation increases management complexity and security risks while limiting scalability.

Lengthy Development Cycles

Without streamlined infrastructure, teams waste valuable time provisioning resources, configuring environments, and managing dependencies. This administrative overhead extends development cycles and delays business impact.

Static Resource Allocation

Legacy approaches force predetermined resource allocations that prevent teams from sharing computing power flexibly as needs evolve. Modern infrastructure implements dynamic allocation that adapts to changing priorities.
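
The difference between static and dynamic allocation can be shown with a small comparison for a single time slot. The team names, quotas, and demand figures in this sketch are invented for illustration.

```python
def served_demand(demand_by_team, quota_by_team=None, pool_size=None):
    """GPU demand that can be satisfied in one time slot."""
    if quota_by_team is not None:
        # Static: each team is capped at its own fixed quota
        return sum(min(demand, quota_by_team[team])
                   for team, demand in demand_by_team.items())
    # Pooled: any team may use any free GPU in the shared pool
    return min(sum(demand_by_team.values()), pool_size)


demand = {"vision": 7, "nlp": 1, "research": 0}  # GPUs requested this hour
quotas = {"vision": 4, "nlp": 2, "research": 2}  # fixed split of 8 GPUs

static_served = served_demand(demand, quota_by_team=quotas)  # 5 of 8 GPUs busy
pooled_served = served_demand(demand, pool_size=8)           # 8 of 8 GPUs busy
```

With fixed quotas, three GPUs sit idle while one team waits. With a shared pool, the same hardware serves all of the demand.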

Building Effective AI Infrastructure: Key Steps

Organizations looking to establish or upgrade their AI infrastructure should consider these practical steps:

1. Define Clear Objectives and Budget

Begin by establishing specific goals for your AI initiatives and aligning infrastructure investments accordingly. Different use cases—from computer vision to natural language processing—may benefit from different hardware configurations and software stacks.

2. Select Appropriate Hardware Components

Choose hardware matched to your AI workloads, considering factors like:

- Model complexity and size

- Data volume and velocity

- Performance requirements

- Budget constraints

For many organizations, a heterogeneous mix of computing resources proves most effective, with specialized hardware for specific workloads.

3. Implement Robust Networking Solutions

High-bandwidth, low-latency networks form the connective tissue of AI infrastructure. Technologies like InfiniBand or high-speed Ethernet ensure data moves efficiently between storage, compute, and delivery systems.

For edge and remote deployments, wireless technologies like 5G can provide both public and private network instances, adding layers of security and customization while supporting large data transfers.
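
To see why interconnect bandwidth matters for distributed training, consider the time needed to synchronize gradients with a ring all-reduce, in which each node transfers about 2(N-1)/N times the gradient size. The sketch below is a bandwidth-only estimate with a hypothetical model size and hypothetical link speeds. It ignores latency and any overlap with computation.

```python
def ring_allreduce_seconds(payload_bytes, nodes, link_bytes_per_sec):
    """Bandwidth-only estimate of one ring all-reduce across `nodes` nodes."""
    if nodes < 2:
        return 0.0
    traffic_per_node = 2 * (nodes - 1) / nodes * payload_bytes
    return traffic_per_node / link_bytes_per_sec


gradient_bytes = 1_000_000_000 * 4  # hypothetical 1B-parameter model, float32

slow = ring_allreduce_seconds(gradient_bytes, 8, 10e9 / 8)   # 10 Gb/s: ~5.6 s
fast = ring_allreduce_seconds(gradient_bytes, 8, 400e9 / 8)  # 400 Gb/s: ~0.14 s
```

If each training step takes well under a second of compute, the slower link would leave the accelerators waiting on the network for most of every step.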

4. Establish Governance and Compliance Measures

As AI applications handle increasingly sensitive data, infrastructure must incorporate robust security and governance controls:

- Data encryption at rest and in transit

- Access control systems

- Compliance monitoring for regulatory requirements

- Model governance and version control
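
Model governance and version control often start with something as simple as recording a content hash for every model artifact. Here is a minimal sketch using an in-memory dictionary as the registry. The function names, metadata fields, and sample bytes are hypothetical, and a real deployment would use a dedicated model registry.

```python
import hashlib


def register_model(registry, name, artifact_bytes, metadata):
    """Append a version entry keyed by the SHA-256 of the serialized model."""
    digest = hashlib.sha256(artifact_bytes).hexdigest()
    version = len(registry.get(name, [])) + 1
    entry = {"version": version, "sha256": digest, **metadata}
    registry.setdefault(name, []).append(entry)
    return entry


def verify_model(registry, name, version, artifact_bytes):
    """Check that an artifact matches the digest recorded at registration."""
    entry = registry[name][version - 1]
    return hashlib.sha256(artifact_bytes).hexdigest() == entry["sha256"]


registry = {}
weights = b"hypothetical serialized weights"
entry = register_model(registry, "simple-net", weights, {"trained_by": "team-a"})

ok = verify_model(registry, "simple-net", 1, weights)               # True
tampered = verify_model(registry, "simple-net", 1, weights + b"!")  # False
```

Recording the digest at registration time makes it possible to confirm later that the model running in production is the one that was reviewed.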

5. Create Integration Pathways

For maximum value, AI infrastructure should connect seamlessly with existing business systems. This integration enables AI applications to access operational data and deliver insights directly to decision-makers.

6. Build for the Future

AI technology evolves rapidly, making future-proofing essential. Design infrastructure with modular components that can be upgraded individually as technology advances.

Measuring AI Infrastructure Success

Effective AI infrastructure delivers measurable improvements across several dimensions:

- Increased GPU availability: Enabling more concurrent experimentation

- Higher resource utilization: Maximizing return on infrastructure investments

- Faster workload execution: Accelerating time-to-insight

- Reduced management overhead: Freeing technical teams to focus on innovation

Together, these metrics indicate an infrastructure that supports rather than hinders AI innovation.
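
Two of these metrics can be computed directly from job records. The sketch below assumes a hypothetical log format with GPU count, run time, and queue wait per job, and the values are invented.

```python
def gpu_utilization(jobs, total_gpus, window_hours):
    """Fraction of available GPU-hours actually consumed by jobs."""
    used = sum(job["gpus"] * job["hours"] for job in jobs)
    return used / (total_gpus * window_hours)


def mean_queue_wait(jobs):
    """Average hours a job waited before it started running."""
    return sum(job["wait_hours"] for job in jobs) / len(jobs)


# Hypothetical job records for one 24-hour window on an 8-GPU cluster
jobs = [
    {"gpus": 4, "hours": 10, "wait_hours": 0.5},
    {"gpus": 2, "hours": 6, "wait_hours": 2.0},
    {"gpus": 8, "hours": 3, "wait_hours": 0.5},
]
utilization = gpu_utilization(jobs, total_gpus=8, window_hours=24)  # about 0.40
avg_wait = mean_queue_wait(jobs)                                    # 1.0 hour
```

Tracking these numbers over time shows whether changes to scheduling or allocation policy are actually paying off.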

The Future of AI Infrastructure

Looking ahead, several trends will shape the evolution of AI infrastructure:

- AI-specific processor architectures optimized for emerging models and techniques

- Software-defined infrastructure providing greater flexibility and automation

- Edge-cloud integration enabling distributed AI processing

- Energy-efficient computing addressing the environmental impact of AI workloads

AI infrastructure provides the foundation upon which modern artificial intelligence capabilities are built. By thoughtfully designing and implementing the hardware, software, and management components of this infrastructure, organizations can accelerate their AI initiatives while controlling costs and ensuring scalability.

Sources: Nvidia, IBM, Supermicro, Technology.org

Written by Alius Noreika