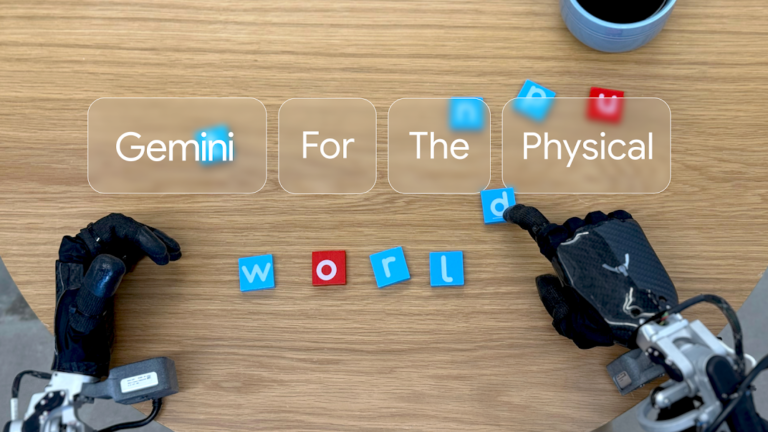

Google DeepMind’s breakthrough AI models are transforming robotics with unprecedented capabilities in generalization, interaction, and dexterity.

Bridging Digital Intelligence with Physical Action

The digital realm of artificial intelligence is crossing into the physical world through Google DeepMind’s latest innovations: Gemini Robotics and Gemini Robotics-ER. These groundbreaking AI models, built on the foundation of Gemini 2.0, represent a significant leap forward in robotic capabilities, enabling machines to perform complex real-world tasks without specific prior training.

“We’re very focused on building the intelligence that is going to be able to understand the physical world and be able to act on that physical world,” explains Carolina Parada, senior director and head of robotics at Google DeepMind. This vision drives the development of robots that can navigate our complex environments with human-like understanding.

Gemini Robotics: The Three Pillars of Advanced Robotics

Google DeepMind’s Gemini Robotics is an advanced vision-language-action (VLA) model that extends Gemini 2.0’s capabilities by adding physical actions as a new output modality. This model excels in three critical areas essential for building truly helpful robots:

Unprecedented Generalization Abilities

Perhaps the most remarkable feature of Gemini Robotics is its ability to generalize to novel situations—tackling tasks it has never encountered during training. By leveraging Gemini’s deep understanding of the world, these robots can adapt to new objects, diverse instructions, and unfamiliar environments on the fly.

According to Google DeepMind’s technical report, Gemini Robotics on average more than doubles performance on a comprehensive generalization benchmark compared to other state-of-the-art VLA models. This adaptability reduces the need for task-specific programming, dramatically expanding a robot’s potential applications.

Intuitive Human-Robot Interaction

For robots to integrate seamlessly into our daily lives, they must interact naturally with people and respond intelligently to dynamic environments. Gemini Robotics achieves this through advanced language understanding capabilities inherited from Gemini 2.0.

The model understands and responds to commands phrased in everyday, conversational language across multiple languages. It continuously monitors its surroundings, detects environmental changes, and adjusts its actions accordingly—enabling natural collaboration between humans and robotic assistants.

Enhanced Dexterity for Complex Tasks

The third critical advancement in Gemini Robotics is its remarkable dexterity. Many everyday tasks that humans perform effortlessly, such as folding paper or packing a lunchbox, require a surprising degree of fine motor precision that has traditionally challenged robots.

Gemini Robotics overcomes these limitations, demonstrating the ability to tackle multi-step tasks requiring precise manipulation. From origami folding to removing bottle caps, these robots showcase dexterity that approaches human-like capabilities.

Gemini Robotics-ER: Understanding Our Three-Dimensional World

Alongside Gemini Robotics, Google DeepMind has introduced Gemini Robotics-ER (Embodied Reasoning), an advanced vision-language model designed specifically to enhance spatial understanding for robotics applications.

This model significantly improves upon Gemini 2.0’s existing abilities in areas like 3D detection and pointing. By combining spatial reasoning with Gemini’s coding capabilities, Gemini Robotics-ER can instantiate entirely new capabilities on demand.

“When you’re packing a lunchbox and have items on a table in front of you, you’d need to know where everything is, as well as how to open the lunchbox, how to grasp the items, and where to place them,” Parada explains. “That’s the kind of reasoning Gemini Robotics-ER is expected to do.”

The model demonstrates remarkable versatility, performing all steps necessary to control a robot out of the box: perception, state estimation, spatial understanding, planning, and code generation. In end-to-end applications, Gemini Robotics-ER achieves a success rate two to three times that of Gemini 2.0.
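To make those stages concrete, here is a minimal Python sketch of how they might chain together for the lunchbox example. It is a toy under stated assumptions, not Gemini Robotics-ER's actual interface: every function name, the tuple-based scene format, the nearest-first ordering, and the `robot.*` calls in the generated strings are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One object reported by the (stubbed) perception stage."""
    name: str
    x: float
    y: float


def perceive(raw_scene):
    # Perception: a real system would process camera images. Here the
    # "scene" is already a list of (name, x, y) tuples (an assumption).
    return [Detection(name, x, y) for name, x, y in raw_scene]


def estimate_state(detections):
    # State estimation: index the detections by object name.
    return {d.name: d for d in detections}


def plan_packing(state, container, items):
    # Spatial understanding and planning: pack the items closest to the
    # container first (a hypothetical heuristic, not from the source).
    box = state[container]

    def distance(name):
        item = state[name]
        return ((item.x - box.x) ** 2 + (item.y - box.y) ** 2) ** 0.5

    steps = [("open", container)]
    for name in sorted(items, key=distance):
        steps.append(("pick", name))
        steps.append(("place", container))
    return steps


def generate_code(steps):
    # Code generation: emit one call per step against a made-up robot API.
    return [f"robot.{action}({target!r})" for action, target in steps]


def run_pipeline(raw_scene, container, items):
    state = estimate_state(perceive(raw_scene))
    return generate_code(plan_packing(state, container, items))
```

Calling `run_pipeline([("lunchbox", 0.0, 0.0), ("apple", 3.0, 4.0), ("sandwich", 1.0, 0.0)], "lunchbox", ["apple", "sandwich"])` yields a list of call strings that opens the lunchbox, then picks and places the sandwich before the apple, because the sandwich sits closer to the container.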

Partnerships and Future Applications

Google DeepMind isn’t developing these technologies in isolation. The company is partnering with Apptronik to build next-generation humanoid robots powered by Gemini 2.0. Additionally, “trusted testers” including Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools have been granted access to the Gemini Robotics-ER model.

These collaborations point toward a future where intelligent robots could transform industries ranging from manufacturing and healthcare to home assistance and beyond.

Safety as a Foundation

As with any advanced AI technology, safety remains paramount. Google DeepMind researcher Vikas Sindhwani described the company’s “layered approach” to robot safety, noting that Gemini Robotics-ER models “are trained to evaluate whether or not a potential action is safe to perform in a given scenario.”
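One way to picture a layered approach is as a series of independent checks that a proposed action must pass before the robot executes it. The Python sketch below is a simplified stand-in: the source describes models trained to judge safety, whereas this example uses hand-written rules, and the specific layers, the force limit, the hazardous-object list, and all names are assumptions made for illustration.

```python
from typing import Callable, List, Optional

# A safety layer inspects a proposed action and returns None if it has no
# objection, or a short reason string if the action should be blocked.
SafetyLayer = Callable[[dict], Optional[str]]

MAX_FORCE_NEWTONS = 20.0                    # assumed limit, illustration only
HAZARDOUS_TARGETS = {"knife", "hot stove"}  # assumed list, illustration only


def force_layer(action: dict) -> Optional[str]:
    # Low-level layer: reject actions that would apply too much force.
    if action.get("force_newtons", 0.0) > MAX_FORCE_NEWTONS:
        return "force exceeds limit"
    return None


def semantic_layer(action: dict) -> Optional[str]:
    # High-level layer: reject actions on objects judged hazardous.
    if action.get("target") in HAZARDOUS_TARGETS:
        return "target is hazardous in this scenario"
    return None


DEFAULT_LAYERS: List[SafetyLayer] = [force_layer, semantic_layer]


def blocking_reasons(action: dict,
                     layers: List[SafetyLayer] = DEFAULT_LAYERS) -> List[str]:
    # Run every layer and collect all objections, in layer order.
    reasons = []
    for layer in layers:
        reason = layer(action)
        if reason is not None:
            reasons.append(reason)
    return reasons


def is_safe(action: dict, layers: List[SafetyLayer] = DEFAULT_LAYERS) -> bool:
    # An action is allowed only if no layer objects.
    return not blocking_reasons(action, layers)
```

Collecting every objection, rather than stopping at the first, keeps the layers independent and makes it easier to see which one blocked an action.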

Building on its “Robot Constitution”—a set of Isaac Asimov-inspired rules introduced last year—Google DeepMind is also releasing new benchmarks and frameworks to advance safety research across the AI industry.

The Path Forward

Google DeepMind’s Gemini Robotics and Gemini Robotics-ER represent significant milestones in the journey toward generally capable robots that can assist humans in meaningful ways. By combining advanced AI with physical embodiment, these technologies bring us closer to a world where robots can seamlessly integrate into our daily lives—understanding our needs, adapting to our environments, and performing complex tasks with human-like dexterity.

As research continues and partnerships expand, we may soon witness robots that not only understand our world but can actively and safely participate in it, transforming the relationship between humans and machines in profound ways.

Written by Alius Noreika