SentiSight.ai’s platform is constantly evolving, expanding the features and capabilities of the image annotation tool available to its users. This article walks you through the labeling processes within our company and shows how the platform can be used to solve real-world problems.

Our labeling team

Labeling images is a very important step in a supervised machine learning project, yet it does not require any special equipment. As a result, our current data annotation team of 7 people works flexibly from their location of choice, whether that is one of our local offices or off-site. The only requirements are access to the online or offline labeling tool and to the project’s dataset.

Labeling is a tedious job requiring annotators to sit in front of their computers throughout the day, yet with the right tools, it can be done without much difficulty.

Labeling within the company

Every project starts with an instruction set provided by the supervisor. It usually contains a full description of the task:

- The exact requirements.

- The time frame.

- Recommended software and labeling tools.

- An example image labeled beforehand.

This instruction set ensures that every team member understands the requirements, so the labels stay consistent across the dataset. To paint a clearer picture, we have chosen a few of our past projects for further analysis.

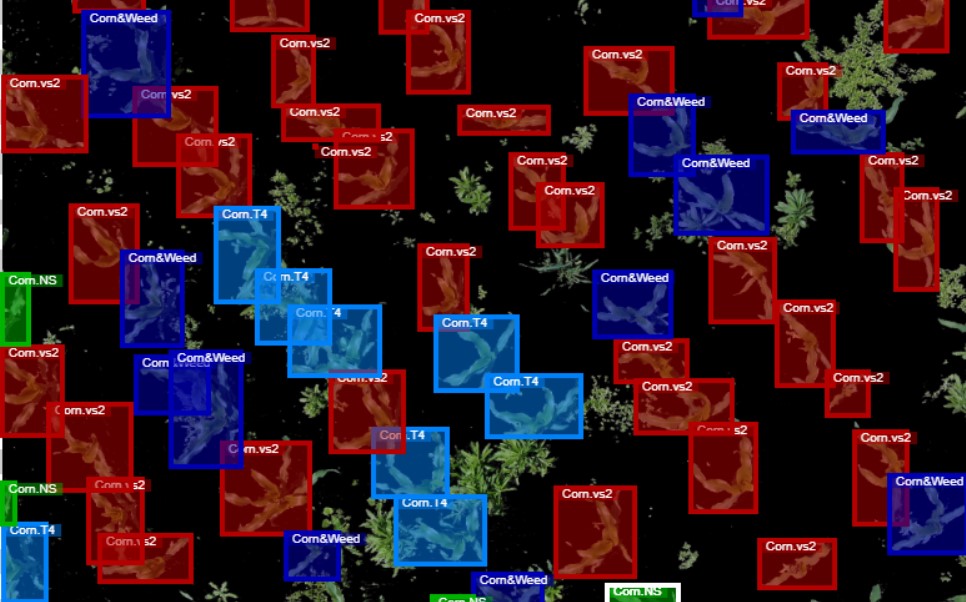

One of our clients in Argentina came to us with a request for a solution that would recognize and classify crops and weeds. The dataset was not very large, so they decided to label it themselves. SentiSight.ai’s annotation tool is very convenient for this purpose because it can be accessed from anywhere in the world and the client does not need to install any additional software to label the images.

As a pre-processing step, the background soil was removed from the images to give a clearer view of the crops, and the images were then passed to the client for labeling. In total, it took around 20 hours to label all of the data. After that, an object detection model was trained to detect different types of corn and any weeds in the field.
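The article does not say how the soil was removed. One common, simple approach for green vegetation is to threshold the Excess Green (ExG) index, computed on sum-normalised colour channels. The sketch below illustrates that technique only; the function names and the 0.05 threshold are hypothetical choices, not the method used in this project.

```python
# Hypothetical soil-removal sketch using the Excess Green (ExG) index.
# Pixels are (r, g, b) tuples with 0-255 channels; this is an illustration,
# not the pre-processing actually used in the project.

Pixel = tuple[int, int, int]


def excess_green(r: int, g: int, b: int) -> float:
    """ExG = 2g - r - b on chromatic (sum-normalised) coordinates."""
    total = r + g + b
    if total == 0:
        return 0.0  # pure black has no chromaticity; treat as non-vegetation
    return (2 * g - r - b) / total


def remove_soil(pixels: list[Pixel], threshold: float = 0.05) -> list[Pixel]:
    """Black out pixels whose ExG is at or below `threshold` (assumed soil)."""
    return [p if excess_green(*p) > threshold else (0, 0, 0) for p in pixels]


# One leaf-green pixel, one brownish soil pixel, one black pixel.
row = [(40, 160, 30), (140, 100, 70), (0, 0, 0)]
print(remove_soil(row))
```

In practice the threshold would need tuning per dataset and lighting conditions; methods such as Otsu thresholding are often used to pick it automatically.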

Another project was carried out for SentiVeillance SDK, proprietary software developed at Neurotechnology that specializes in surveillance, people and vehicle tracking and classification, and licence plate recognition. The team of labelers received an instruction set requiring the following tasks to be completed:

- Label the middle of a licence plate with a point – a model trained on this dataset was used to find the location of a licence plate.

- Label the full licence plate with a polygon tool to show its four corners – a model trained on this dataset was used to detect the full licence plate.

- Label each character of the licence plate with a bounding box – a model trained on this dataset was used to perform optical character recognition to extract the licence plate number.
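To show how the three label types fit together for a single plate, here is a hypothetical in-memory record. The class and field names are our own illustration, not SentiSight.ai’s export format. The plate number is read by sorting the character boxes left to right, which assumes a single-row plate.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]


@dataclass
class CharBox:
    """Bounding box around one licence plate character (hypothetical schema)."""
    char: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


@dataclass
class PlateAnnotation:
    centre: Point                 # task 1: point on the middle of the plate
    corners: list[Point]          # task 2: polygon through the plate's four corners
    chars: list[CharBox] = field(default_factory=list)  # task 3: one box per character

    def __post_init__(self) -> None:
        if len(self.corners) != 4:
            raise ValueError("plate polygon must have exactly four corners")

    def plate_number(self) -> str:
        """Read characters left to right (assumes a single-row plate)."""
        return "".join(b.char for b in sorted(self.chars, key=lambda b: b.x_min))


plate = PlateAnnotation(
    centre=(50, 20),
    corners=[(10, 10), (90, 10), (90, 30), (10, 30)],
    chars=[CharBox("B", 30, 12, 40, 28), CharBox("A", 12, 12, 22, 28),
           CharBox("1", 50, 12, 60, 28)],
)
print(plate.plate_number())
```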

A team of 5 people shared the tasks among themselves and labeled a dataset of 3,269 images in 18 hours. Depending on the task’s complexity and the annotator’s experience, annotating an image took anywhere from 15 seconds to a few minutes.
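The average time per image depends on how the 18 hours are read, which the text does not specify: either as the team’s combined labeling time, or as elapsed time with all 5 annotators working in parallel. A quick calculation of both readings:

```python
def seconds_per_image(images: int, hours: float, people: int = 1) -> float:
    """Average labeling time per image, in seconds, across `people` annotators."""
    return hours * 3600 * people / images


# Reading 1: 18 hours is the team's combined labeling time.
combined = seconds_per_image(3269, 18)
# Reading 2: 18 hours of elapsed time with all 5 annotators working in parallel.
parallel = seconds_per_image(3269, 18, people=5)
print(f"{combined:.1f} s vs {parallel:.1f} s per image")
```

This works out to roughly 20 seconds or roughly 100 seconds per image, covering all three annotation tasks. Both figures fall within the 15-second-to-a-few-minutes range quoted above.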

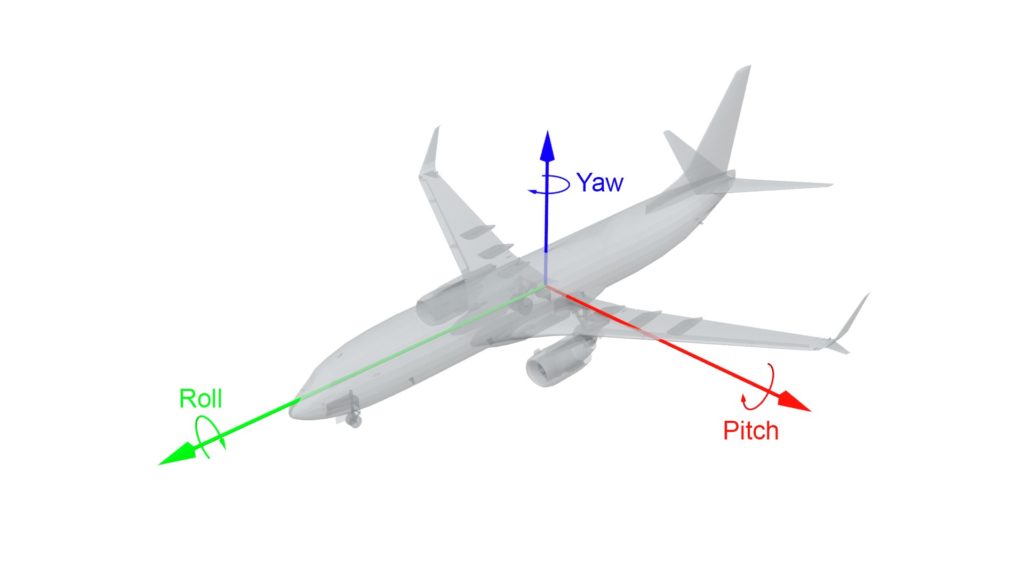

The labeling team is currently working on several projects, one of which is labeling human head pose. In three-dimensional space, orientation can be described by three angles: roll, pitch and yaw. They are usually explained using an aircraft’s principal axes, which describe how an aircraft in flight can rotate: rotation around the front-to-back axis is called roll, rotation around the side-to-side axis is pitch, and rotation around the vertical axis is yaw.
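To make the three angles concrete, the sketch below builds a rotation matrix from roll, pitch and yaw and derives the direction a head is facing. It assumes a right-handed frame with x pointing forward, y to the left and z up, and the composition R = Rz(yaw) · Ry(pitch) · Rx(roll). Conventions differ between datasets and tools, so the order and signs here are assumptions, not those of any particular labeling format.

```python
import math

Matrix = list[list[float]]


def rot_x(a: float) -> Matrix:
    """Rotation about the front-to-back (x) axis: roll."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a: float) -> Matrix:
    """Rotation about the side-to-side (y) axis: pitch."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a: float) -> Matrix:
    """Rotation about the vertical (z) axis: yaw."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def pose_to_matrix(roll: float, pitch: float, yaw: float) -> Matrix:
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll); angles in radians."""
    return matmul(rot_z(yaw), matmul(rot_y(pitch), rot_x(roll)))


def facing_direction(roll: float, pitch: float, yaw: float) -> tuple[float, float, float]:
    """Image of the forward unit vector (1, 0, 0), i.e. the first column of R."""
    r = pose_to_matrix(roll, pitch, yaw)
    return (r[0][0], r[1][0], r[2][0])


# Roughly (0, 1, 0): a yaw of 90 degrees turns the face from +x toward +y.
print(facing_direction(0.0, 0.0, math.radians(90)))
```

Note that roll spins the head around its own line of sight, so it does not change the facing direction; only pitch and yaw do.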

This task involves correcting existing roll, pitch and yaw annotations on human faces, which indicate the direction each face is pointing. Because the dataset is fairly small, at around 8,000 images, a single annotator can handle it.

Naturally, we do run into some obstacles. The most common is new annotators labeling so meticulously that they spend too much time on a single task. Accurate labeling is a highly desired skill, yet a job like this requires a balance between speed and quality. Moreover, in some cases, machine learning algorithms can extract the required information without pixel-level accuracy in the labels. Another issue we sometimes run into is annotators interpreting the task differently, which we address by providing clearer and simpler instructions before the task begins.

Once a project is finished, each annotator’s work is assessed on the following criteria:

- The amount of time they have spent on the project;

- The combination of accuracy and speed;

- The amount of communication needed with the annotator regarding constructive criticism during the project.

These parameters are then discussed during monthly performance reviews.

Enjoy the benefits

The SentiSight.ai platform provides numerous features that help annotators in their work and help supervisors manage the team better:

- Informative statistics. To make sure employees get the pay they deserve, it is necessary to know how much time and effort they have put into the task. Backend calculations on the SentiSight.ai platform take care of this by recording the time an annotator spends actively labeling images with the mouse, excluding idle time. Moreover, by navigating to Wallet > See time, project managers can view and download summarized labeling time statistics, filtered by project, user or timeframe. This is useful both for performance reviews with the team and for preparing the data needed by the payroll department.

- Filtering options. Filtering is extremely useful to both annotators and their managers for making sure no images or labeling errors are left behind. Images can be filtered by type, by label and by image status, and these filters can be combined in many ways. For instance, by selecting Not and Seen by you, annotators can view and label only the images they have not yet seen. Furthermore, by choosing Labeled by another user together with Not and Seen by you, they can review their colleagues’ labeled images and skip the ones they have already reviewed. Please note that if someone else edits an image, its status is reset to “not seen” for all other users, so everyone can keep track of any new edits.

- Immediate feedback. In our previous article, we discussed the main pros and cons of online and offline labeling tools. Offline tools let users keep the dataset on their local device, but they do not let supervisors track work progress in real time. If the dataset is being labeled incorrectly, the supervisor only finds out when the annotator submits a batch of images for review, and if those batches are large, many images may already be labeled incorrectly. In contrast, working online via the SentiSight.ai platform lets managers oversee progress every step of the way and give immediate feedback if something goes wrong.
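The idea behind counting only active labeling time can be sketched as follows: sum the gaps between consecutive input events, but discard any gap longer than an idle threshold. SentiSight.ai does not document its backend calculation here, so the 60-second threshold, the session format and the function names are all hypothetical.

```python
from collections import defaultdict


def active_seconds(event_times: list[float], idle_after: float = 60.0) -> float:
    """Sum the gaps between consecutive input events (timestamps in seconds),
    skipping any gap longer than `idle_after`, which is treated as idle."""
    times = sorted(event_times)
    return sum((cur - prev for prev, cur in zip(times, times[1:])
                if cur - prev <= idle_after), 0.0)


def summarize(sessions: list[dict], key: str) -> dict[str, float]:
    """Total active seconds grouped by a session field such as "user" or "project"."""
    totals: dict[str, float] = defaultdict(float)
    for session in sessions:
        totals[session[key]] += active_seconds(session["events"])
    return dict(totals)


sessions = [
    # ana works for 20 s, walks away for 8 minutes, then works 10 s more.
    {"user": "ana", "project": "plates", "events": [0, 10, 20, 500, 510]},
    {"user": "ben", "project": "plates", "events": [0, 30]},
]
print(summarize(sessions, "user"))
print(summarize(sessions, "project"))
```

The idle threshold is the key design choice: too short and slow but genuine work is dropped, too long and breaks are counted as labeling time.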
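The status filters described in the list above can be modelled with two sets per image: who has labeled it and who has seen it. The sketch below is a hypothetical model written for illustration, not SentiSight.ai’s actual data model. It reproduces the two filter combinations and the rule that an edit resets the image to “not seen” for everyone except the editor.

```python
from dataclasses import dataclass, field


@dataclass
class Image:
    """Hypothetical per-user image status; not SentiSight.ai's actual data model."""
    name: str
    labeled_by: set[str] = field(default_factory=set)
    seen_by: set[str] = field(default_factory=set)

    def view(self, user: str) -> None:
        """Opening an image marks it as seen by that user."""
        self.seen_by.add(user)

    def edit(self, user: str) -> None:
        """An edit resets the status to "not seen" for everyone but the editor."""
        self.labeled_by.add(user)
        self.seen_by = {user}


def not_seen_by(images: list[Image], user: str) -> list[Image]:
    """Filter: Not + Seen by you."""
    return [im for im in images if user not in im.seen_by]


def to_review(images: list[Image], user: str) -> list[Image]:
    """Filter: Labeled by another user + Not + Seen by you."""
    return [im for im in not_seen_by(images, user) if im.labeled_by - {user}]


a, b = Image("a.jpg"), Image("b.jpg")
a.edit("ana")  # ana labels a.jpg; b.jpg is still unlabeled
print([im.name for im in to_review([a, b], "ben")])
```

After ben opens `a.jpg` it drops out of his review queue, and it reappears as soon as ana edits it again.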

Conclusion

Although labeling a large dataset is a labour-intensive process, it feels much less so when the most suitable labeling toolkit for the job is chosen. At SentiSight.ai we offer an intuitive image labeling platform designed for the best user experience, whether you are a beginner or an expert. Annotators can label with bounding boxes, polygons, points and polylines and use smart labeling tools, while filtering and time-tracking features ease the project manager’s job. Check out our image labeling tool user guide for more information on the available features.

The resulting labeled datasets can help you train your own model to deploy in your industry of choice, whether that is defect detection in manufacturing or image recognition in retail, to name two examples.

Get started on labeling your dataset using the SentiSight.ai platform. For more information on how to manage your project or how to choose the right labeling tool, check our latest posts.