Although object detection may seem like a recent computer vision technology, it has been around since the early 1960s. Its first applications included character pattern recognition systems for office automation, as well as assembly and verification processes in the semiconductor industry, which directly contributed to the economic development of various countries.

A decade later, object detection methods were adopted in the pharmaceutical industry for drug classification, in biomedical research for chromosome recognition, and in ATMs for bill recognition.

These traditional object detection methods followed a template matching and sliding window approach: to detect an object in an image, you slid its template across the picture, starting from the top-left corner. Each position produced a high or a low correlation score, depending on how well the template matched the image content at that position. However, this method was not very accurate if the object of interest appeared in a different pose, size, or scale than in the template, or if it was occluded.
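The sliding window idea can be sketched in a few lines. The example below is a minimal illustration rather than a historical implementation: it assumes greyscale images stored as plain Python lists, uses normalised cross-correlation as the score, and the function names `ncc` and `match_template` are made up for this sketch.

```python
import math

def ncc(patch, template):
    """Normalised cross-correlation between two equally sized 2-D lists."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    p_mean = sum(p) / len(p)
    t_mean = sum(t) / len(t)
    num = sum((a - p_mean) * (b - t_mean) for a, b in zip(p, t))
    den = math.sqrt(sum((a - p_mean) ** 2 for a in p) *
                    sum((b - t_mean) ** 2 for b in t))
    # A flat patch or template has no contrast, so treat it as "no match".
    return num / den if den > 0 else 0.0

def match_template(image, template):
    """Slide the template over the image, starting from the top-left corner,
    and return (best_score, (row, col)) for the best-matching position."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    best = (-2.0, (0, 0))  # scores lie in [-1, 1], so -2 is a safe start
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best[0]:
                best = (score, (r, c))
    return best

image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 1, 0],
    [0, 0, 1, 9, 0],
    [0, 0, 0, 0, 0],
]
template = [[9, 1], [1, 9]]
score, position = match_template(image, template)
print(score, position)
```

Because the template is compared pixel by pixel at a single size and orientation, a rotated, rescaled or partly hidden object produces a low score, which is the weakness described above.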

Traditional object detection approaches

Viola-Jones detector

In 2001, the Viola-Jones detector [1] was proposed to solve the face detection problem in computer vision, a problem made difficult by a computer's need for precise instructions and constraints to complete a task.

The detector was based on a feature extraction and classification method that scans an image to determine whether any part of it contains a human face. To achieve that, the algorithm goes through four stages:

- Selecting Haar-like features – black and white rectangular boxes arranged so that they represent specific features of a human face, for instance, lighter upper cheeks and darker eye regions.

- Creating an integral image, which allows the sum of the pixels inside any rectangle, and therefore the value of any Haar-like feature, to be computed with a handful of lookups.

- AdaBoost training: selecting the best features and training the classifiers that use them.

- Cascading classifiers: arranging the classifiers in stages so that sub-windows unlikely to contain a face are rejected early and only promising ones are examined further.
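The first two stages can be illustrated with a short sketch. It assumes a greyscale image stored as a list of lists, and the helper names (`integral_image`, `rect_sum`, `two_rect_feature`) are hypothetical; it is not the original Viola-Jones code and it leaves out AdaBoost and the cascade.

```python
def integral_image(img):
    """Return ii where ii[r][c] is the sum of img[0..r-1][0..c-1].
    The extra zero row and column remove the need for boundary checks."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        row_sum = 0
        for c in range(w):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels inside a rectangle, using only four lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

def two_rect_feature(ii, top, left, height, width):
    """Two-rectangle Haar-like feature: lower half minus upper half.
    The value is large when the upper region (e.g. the eyes) is darker
    than the lower region (e.g. the upper cheeks)."""
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return lower - upper

# Toy 4x4 window: two dark rows above two bright rows.
window = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(window)
print(two_rect_feature(ii, 0, 0, 4, 4))
```

Once the integral image is built, every feature costs the same few lookups regardless of its size, which is what makes evaluating many features per sub-window affordable.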

This detector was highly accurate and fast enough to distinguish faces from non-faces in real time. However, its training was slow and it missed texture and shape information. Since it was developed primarily to solve the face detection problem, the Viola-Jones detector was never generalized.

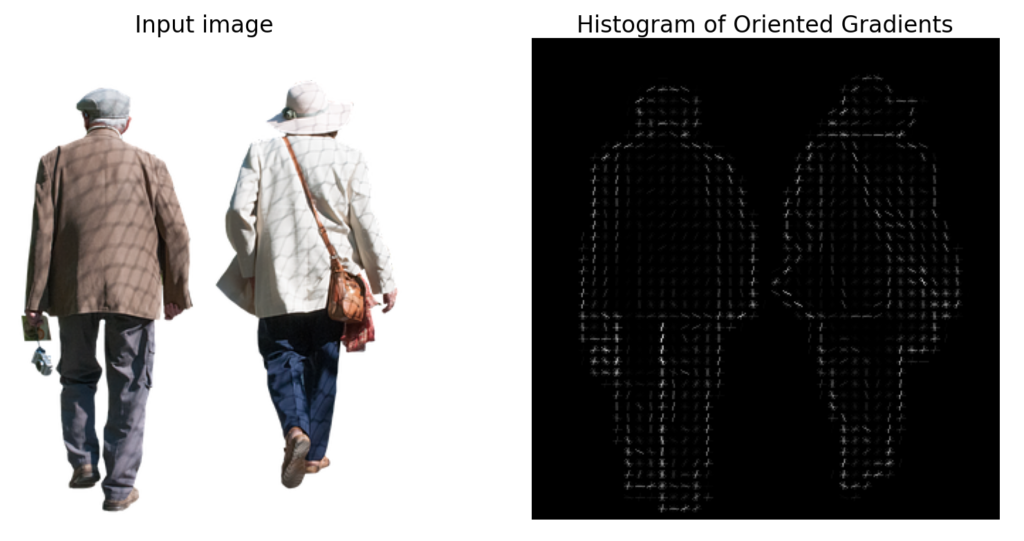

Histogram of oriented gradients

Another feature descriptor, the histogram of oriented gradients (HOG) [2], gained popularity after the Conference on Computer Vision and Pattern Recognition held in 2005.

Mainly used in self-driving cars and other applications that require human detection, this method captures contrast in different regions of the image, based on the idea that an object's shape can be described by the distribution of local gradient magnitudes and orientations.

After an optional gamma normalisation, the input image is divided into a dense grid of connected cells, and the gradient's magnitude and angle are computed for every pixel. Subsequently, a histogram of orientations is created for every cell, with each vote weighted by the magnitude of the gradient or a clipped version of it.

Cells are then grouped into blocks and normalised locally, which provides contrast normalisation and consequently improves accuracy. The normalised blocks are combined into the so-called HOG features and used in a support vector machine (SVM) or any other machine learning algorithm, which is trained on two data sets: images that contain an object of interest and images that do not. The HOG feature descriptor can be used to detect various objects, although it is best suited to human detection.
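A simplified sketch of the per-cell histogram follows. It rests on several assumptions that are not taken from the original paper's implementation: central differences on interior pixels only, unsigned angles in [0, 180), nine bins, hard assignment of each vote to a single bin, and L2 normalisation of one cell rather than of a block of cells. The function names are illustrative.

```python
import math

def cell_histogram(cell, bins=9):
    """Orientation histogram for one cell (a 2-D list of grey values).
    Each interior pixel votes for one orientation bin, and the vote is
    weighted by the gradient magnitude."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for r in range(1, len(cell) - 1):
        for c in range(1, len(cell[0]) - 1):
            gx = cell[r][c + 1] - cell[r][c - 1]   # horizontal gradient
            gy = cell[r + 1][c] - cell[r - 1][c]   # vertical gradient
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # The extra "% bins" guards against rounding up to 180.0.
            hist[int(angle // bin_width) % bins] += magnitude
    return hist

def l2_normalise(vector, eps=1e-6):
    """Scale a histogram to unit length; eps avoids division by zero."""
    norm = math.sqrt(sum(v * v for v in vector) + eps ** 2)
    return [v / norm for v in vector]

# Toy 6x6 cell containing a vertical edge.
cell = [[0, 0, 0, 90, 90, 90] for _ in range(6)]
hist = cell_histogram(cell)
features = l2_normalise(hist)
print(hist)
```

A vertical edge produces purely horizontal gradients, so all of the weight lands in the first bin. In a fuller pipeline, the histograms of neighbouring cells would be concatenated per block before normalisation and then passed to the classifier.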

Deep learning approaches

AlexNet

As technology advanced, large datasets started emerging to improve the training of AI algorithms and models. To push the performance of such models further, the curators of one of these datasets, ImageNet, started running an annual software contest, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC).

The competition surpassed its creators' expectations, and its 2012 winner, the convolutional neural network (CNN) classification model AlexNet [3], marked the start of the modern history of object detection. It was a fast graphics processing unit (GPU) implementation of a CNN, based on the older LeNet structure and combined with data augmentation, and it achieved the lowest error rates in the contest. This success rejuvenated interest in neural networks and made AlexNet the most influential innovation in computer vision at that time, marking the beginning of the deep learning revolution.

Region proposals

The turning point for object detection technologies came with the introduction of the Region-Based Convolutional Neural Network (R-CNN) [4]. The proposed model used a selective search algorithm to extract around 2000 region proposals (bounding boxes that may contain an object), which were then reshaped and passed on to a CNN for analysis. The features extracted in this process were used to classify the region proposals, and the bounding boxes were refined using bounding box regression. This approach was accurate but too slow for real-time use, so its successors quickly took its place.

The R-CNN variants Fast R-CNN [5] and Faster R-CNN [6] both attempted to solve their predecessor's problems. By performing the convolutional operation only once per input image, they extracted a single shared feature map, which notably increased the model's speed. Moreover, Faster R-CNN eliminated the time-consuming selective search algorithm by replacing it with a separate network that predicts region proposals. This decision significantly reduced the model's test time and moved it towards real-time object detection.

You Only Look Once

Around the same time, the You Only Look Once (YOLO) [7] algorithm emerged. As the name suggests, it uses a single convolutional network to predict classes and bounding boxes in one evaluation. The input image is divided into a grid of square cells, each of which is responsible for predicting a fixed number of bounding boxes. Boxes with a low probability of containing an object are removed, while those with a high probability are refined so that they fit tightly around the objects of interest.
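Two of these steps, assigning a point to a grid cell and discarding low-confidence boxes, can be sketched as follows. This is an illustration under stated assumptions, not YOLO itself: the 7×7 grid, the 0.5 threshold, the `(confidence, box)` tuple layout and both function names are chosen for the example, and the network that produces the predictions is left out entirely.

```python
def responsible_cell(cx, cy, image_w, image_h, grid_size=7):
    """Return (row, col) of the grid cell that contains the point (cx, cy)."""
    col = min(int(cx / image_w * grid_size), grid_size - 1)
    row = min(int(cy / image_h * grid_size), grid_size - 1)
    return row, col

def filter_boxes(predictions, threshold=0.5):
    """Keep predictions whose confidence is at least `threshold`.
    Each prediction is a tuple (confidence, (x, y, w, h))."""
    return [p for p in predictions if p[0] >= threshold]

# An object centred at (224, 100) in a 448x448 image.
print(responsible_cell(224, 100, 448, 448))

# Hypothetical raw predictions for that cell.
predictions = [
    (0.92, (224, 100, 80, 120)),
    (0.08, (230, 110, 30, 30)),
    (0.55, (220, 95, 90, 130)),
]
kept = filter_boxes(predictions)
print(kept)
```

Several boxes can survive the threshold for the same object, so a practical pipeline needs a further step that prunes overlapping boxes; that step is not shown here.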

Depending on the model, YOLO processes images at 45 frames per second or more and makes fewer false positive predictions than other algorithms, which qualifies it for real-time applications. However, because of the strong spatial constraints on its bounding box predictions, it can detect only a limited number of nearby objects, and small objects that appear in groups cause prediction errors too. Its updated versions, such as YOLOv2 [8], YOLOv3 and YOLOv4 [9], improved the overall detection accuracy and were better at detecting small-scale objects.

Single Shot MultiBox Detector

The Single Shot MultiBox Detector (SSD) [10] was presented in 2016 as an improvement on previous state-of-the-art object detection technologies. Just like YOLO, it is a single-shot detector that recognises multiple object categories. It applies small convolutional filters to feature maps to predict object category scores and bounding box outputs, using separate filters for different aspect ratios. As a result, SSD achieves more accurate and faster results than previous single-shot detectors such as YOLO, even when given relatively low-resolution input. Nonetheless, its drawbacks include confusion between similar object categories and slightly worse accuracy on smaller objects.

From 2017 onwards

Today, object detection continues to be a thriving field, covering areas such as pedestrian and traffic light/sign detection for autonomous vehicles, human-computer interaction, and face detection for security and identity checks.

Some of the latest additions to computer vision technologies are RetinaNet [11] and CenterNet [12]. The former, often used for aerial and satellite imagery, has proved useful for small-scale and densely packed objects. The latter is a new approach to object detection that detects the center points of objects, thus eliminating the need for the anchor boxes used in other methods.
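The center point idea can be illustrated by looking for local peaks in a heatmap. The sketch below is a toy version under assumptions of its own: the heatmap is a hand-written list of scores rather than a network output, a peak is any value that reaches the threshold and is not smaller than its eight neighbours, and the function name `find_centres` is hypothetical.

```python
def find_centres(heatmap, threshold=0.5):
    """Return (row, col, score) for every cell that reaches `threshold`
    and is not smaller than any cell in its 3x3 neighbourhood."""
    h, w = len(heatmap), len(heatmap[0])
    centres = []
    for r in range(h):
        for c in range(w):
            score = heatmap[r][c]
            if score < threshold:
                continue
            neighbourhood = [
                heatmap[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
            ]
            if score >= max(neighbourhood):
                centres.append((r, c, score))
    return centres

heatmap = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.2, 0.0],
    [0.1, 0.2, 0.1, 0.6],
    [0.0, 0.0, 0.3, 0.1],
]
print(find_centres(heatmap))
```

Each detected peak stands for one object, so no set of predefined anchor boxes has to be matched against the image; the size of each box would be predicted separately at the peak location.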

Because object detection is a key ability for various computer and robot vision systems, ongoing research continues to drive progress. The adoption of machine learning methods and the development of innovative representations improve our understanding of the field and bring us closer to future models that are capable of real-time open-world learning. SentiSight.ai's object detection service incorporates the latest technologies and keeps them at users' fingertips. We continuously try to improve our interface and make it as user-friendly as possible for beginners and experts alike.

Read another article for a quick insight into how users can train their own object detection model using SentiSight.ai.

References

1. P. Viola and M. Jones, “Rapid Object Detection using a Boosted Cascade of Simple Features”, IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Mitsubishi Electric Research Laboratories, Inc., 2001.

2. N. Dalal and B. Triggs, “Histograms of oriented gradients for human detection”, IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2005.

3. A. Krizhevsky, I. Sutskever and G. Hinton, “ImageNet Classification with Deep Convolutional Neural Networks”, Neural Information Processing Systems, Vol. 25, 2012.

4. R. Girshick, J. Donahue, T. Darrell and J. Malik, “Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation”, IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, IEEE, 2014.

5. R. Girshick, “Fast R-CNN”, IEEE International Conference on Computer Vision, 2015.

6. S. Ren, K. He, R. Girshick and J. Sun, “Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks”, IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017.

7. J. Redmon, S. Divvala, R. Girshick and A. Farhadi, “You Only Look Once: Unified, Real-Time Object Detection”, IEEE Conference on Computer Vision and Pattern Recognition, 2016.

8. J. Redmon and A. Farhadi, “YOLO9000: Better, Faster, Stronger”, IEEE Conference on Computer Vision and Pattern Recognition, 2017.

9. C. Kumar B., R. Punitha and Mohana, “YOLOv3 and YOLOv4: Multiple Object Detection for Surveillance Applications”, Third International Conference on Smart Systems and Inventive Technology, 2020.

10. Chengcheng Ning, Huajun Zhou, Yan Song and Jinhui Tang, “Inception Single Shot MultiBox Detector for object detection”, IEEE International Conference on Multimedia, 2017.

11. T. Lin, P. Goyal, R. Girshick, K. He and P. Dollár, “Focal Loss for Dense Object Detection”, IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017.

12. X. Zhou, D. Wang and P. Krähenbühl, “Objects as Points”, arXiv:1904.07850, 2019.